
Workshop “Horizontal/Vertical Standardisation for AI”

21 February 2022

Key takeaways & Implications for Industry and Society

Europe needs to bring the European angle to the international level rather than create EU-specific standards. This is in line with the path recently defined by the European Standardisation Strategy, which seeks to turn Europe into a standard-setter rather than a standard-taker, as Internal Market Commissioner Thierry Breton highlighted in the press release announcing the strategy on 7 February 2022. Lack of alignment can lead to uncertainty and unbearable costs for companies, which will ultimately translate into higher costs for society.

The workshop “Horizontal/Vertical Standardisation for AI”, organised on 21 February 2022 by the European Commission’s DG GROW (Directorate-General for Internal Market, Industry, Entrepreneurship and SMEs), sought to tackle some of these issues. The event gathered representatives from the European Standardisation Organisations (ESOs), societal stakeholders and actors from various horizontal and vertical sectors to discuss the current state of play of artificial intelligence and the corresponding efforts carried out by the Commission.

The European AI Act (proposed in April 2021) sets out horizontal rules for the development, placing on the market and use of AI-driven products, services and systems within the territory of the EU. The draft regulation provides core artificial intelligence rules that apply to all industries.

Antonio Conte, ICT Standardisation Policy Officer at DG GROW of the European Commission, opened the event by pointing out that the AI Act is a horizontal framework intended to harmonise AI rules across different sectors. The proposal also aims to define the requirements applicable to the development of a set of AI systems to be placed on the market. The EC also wants to ensure that the future AI roadmap effectively serves the needs of all vertical standardisation sectors.

Irina Orssich, Head of Sector AI Policy at the EC, continued by focusing on the undeniable benefits of AI for society, but also on the related threats to safety and fundamental rights that need to be taken into account. Her presentation included a chapter on international cooperation and a legal framework to regulate AI in its entirety, creating a global level playing field. “It’s important to have a risk management system, high quality training and validation data to avoid bias, and provide the user of the system with correct info on how to use the system.”

At this point the discussion turned to the ESOs’ point of view on the matter.

Sebastian Hallensleben, CEN-CLC JTC 21 Chair, underlined a few key elements to be considered:

- The usage of AI vs the development or engineering of AI.

- The European vs international level: a complex situation where certain priorities in Europe differ from those at international level.

- Different interests of global vs regional stakeholders.

- Technical vs societal concerns.

- Different regulatory regimes related to different standardisation communities.

However, there is also a large opportunity deriving from the interconnection between horizontal and vertical sectors. Horizontal standards are largely inspired by specific use cases, needs or application scenarios that are in fact vertical needs. Having so many vertical issues is therefore a real chance to make the development of horizontal standards easier for transversal sectors.

Patrick Bezombes, CEN-CLC JTC 21 Vice-chair, stressed the importance of enabling SMEs, start-ups and the whole industrial ecosystem to build the competences needed to assess the conformity of their AI systems, as well as the need to keep the number of standards developed as small as possible. It is nonetheless crucial to keep a transversal approach to AI and avoid the risk of working in silos.

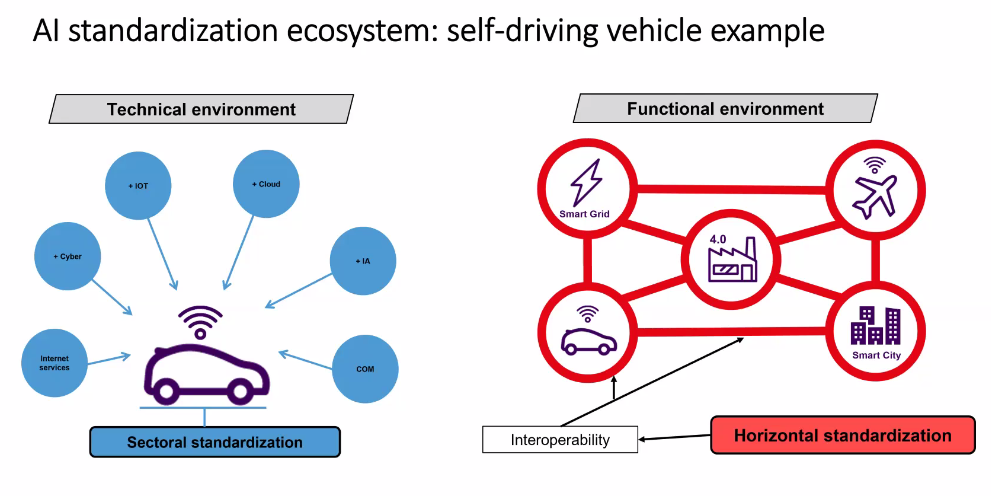

Horizontal/sectoral cooperation needs to be strengthened. Self-driving vehicles are one example: they rely on a wide array of technical standards for their development, but the actual application spans multiple horizontal domains.

Lindsay Frost (ETSI OCG AI Chair) and George Sharkov (ETSI ISG SAI Vice-chair) reminded participants that consistency across different domains and attention to the legal aspects are fundamental. Specifically, ETSI aims to handle the following needs for AI:

- To include ethical requirements in AI usage (e.g. for eHealth, Privacy/security).

- To harness AI for optimization of ICT networks.

- To ensure reliability through appropriate testing of systems using AI.

- To overcome AI-related security issues (enhancement of security measures).

- To better manage and characterize data, including from IoT systems, widely used by AI.

- To mitigate against threats posed by AI.

Turning to the societal stakeholders’ perspective, Chiara Giovannini (ANEC Deputy Secretary-General) noted the possible risks for consumers, especially with regard to conformity assessment. The reference to conformity assessment in the AI Act (Article 6) “poses the consequence that the majority of AI consumer products (such as toys or connected appliances) would undergo only the manufacturer self-assessment, even if posing a potential risk to consumers”. “We think that new specific rules should be adopted in the draft AI regulation, to make the appropriate risk assessment of all AI products, taking into account the nature of the hazard and the likelihood of its occurrence”, concluded Ms Giovannini.

Alpo Varri, CEN/TC 251 – WG II Convenor, turned the focus to feedback received from the Finnish parliament aimed at reinforcing the current draft AI regulation, mostly by making the responsibilities of the stakeholders clearer. Health system manufacturers need a robust quality system and must continuously test the performance of their products. The European Health Data Space holds the promise of ample AI training material, but adopting an overly restrictive regulation may hinder AI development.

A concluding consideration came from Koen Cobbaert (Philips Senior Manager): standards are essential to support EU legislation, but alignment is equally necessary. Alignment is required on:

- Terminology

- Quality management systems

- Risk management systems

- Accuracy, robustness and cybersecurity

- Transparency and information to users

- Human oversight

- Data governance