Human-in-the-loop and OETP

Human-in-the-loop (HITL) is a design pattern in AI that leverages both human and machine intelligence to create machine learning models and to bring meaningful automation scenarios into the real world. With this approach AI systems are designed to augment or enhance human capacity, serving as a tool to be exercised through human interaction.

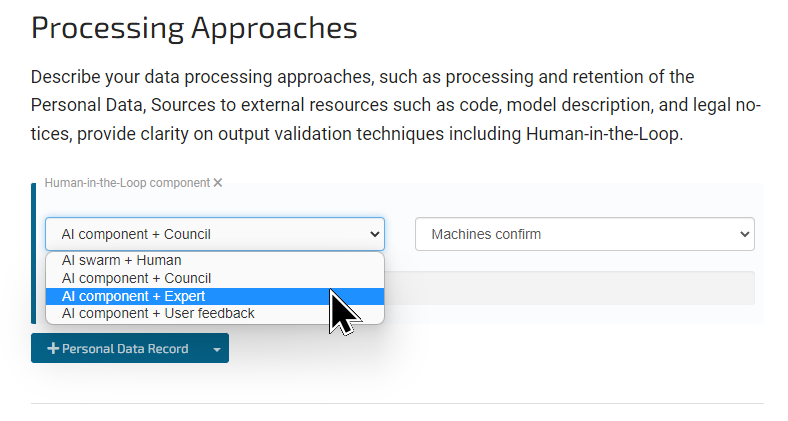

The Open Ethics Transparency Protocol (OETP) offers the following model for HITL disclosure, which allows each system's HITL properties to be accessed using the oetp:// URI scheme.

---

"Big Tech" is an important driver of innovation. However, the resulting concentration of power creates Big Risks for the economy, for the ethical use of technology, and for basic human rights (we consider privacy to be one of them).

Decentralization of SBOM (Software Bill of Materials) and data processing disclosures was described earlier as a key requirement for the Open Ethics Transparency Protocol (OETP).

Fulfilling this requirement allows disclosures to be formed and validated by multiple parties and avoids a harmful concentration of power. To allow efficient decentralization and access to the disclosures of autonomous systems, such as AI systems powered by trained machine learning models, the vendor (or developer) MUST send requests to a Disclosure Identity Provider. The provider, in turn, processes the structured data of the disclosure with a cryptographic signature generator and then stores the integrity hash in persistent storage, for example using a Federated Identity Provider. This process was described in the Open Ethics Transparency Protocol I-D document; however, that document did not specify exactly how disclosures are accessed. The specification for the URI scheme described here closes this gap.
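The flow above can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration and is not taken from the OETP specification: the `InMemoryProvider` class, the disclosure field names, the choice of SHA-256 over canonical JSON, and the `oetp://<hash>` URI layout are all hypothetical. The cryptographic signature step is omitted; only the integrity hash and the lookup by URI are shown.

```python
import hashlib
import json
from urllib.parse import urlsplit


def integrity_hash(disclosure):
    """Hash a canonical JSON form so that key order does not matter.

    SHA-256 is an assumption here; the protocol may mandate another digest.
    """
    canonical = json.dumps(disclosure, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class InMemoryProvider:
    """Hypothetical stand-in for a Disclosure Identity Provider."""

    def __init__(self):
        # A dict plays the role of persistent storage in this sketch.
        self._store = {}

    def submit(self, disclosure):
        """Store the disclosure under its hash and return an oetp:// URI."""
        digest = integrity_hash(disclosure)
        self._store[digest] = disclosure
        # Assumed URI layout: the hash is the authority component.
        return f"oetp://{digest}"

    def resolve(self, uri):
        """Look up a disclosure by URI and re-check its integrity hash."""
        parts = urlsplit(uri)
        if parts.scheme != "oetp":
            raise ValueError(f"unsupported scheme: {parts.scheme!r}")
        disclosure = self._store[parts.netloc]
        if integrity_hash(disclosure) != parts.netloc:
            raise ValueError("integrity check failed")
        return disclosure


# Usage: a vendor submits a disclosure, a consumer resolves it later.
provider = InMemoryProvider()
example_disclosure = {"system": "demo-classifier", "hitl": {"human_review": True}}
example_uri = provider.submit(example_disclosure)
resolved = provider.resolve(example_uri)
```

Because the identifier is derived from the content, any party holding the disclosure can recompute the hash and compare it with the URI, which is what lets validation be spread across multiple parties rather than resting with a single one.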

My recent work builds on our previous contribution to the IETF and aims to simplify access to AI disclosures and, more generally, to disclosures of autonomous systems.

Thanks to the hard work of EUOS Technical Working Group on AI (TWG-AI) Chair Lindsay Frost (ETSI, NEC) and the executive committee members Fergal Finn, Karl Grün, Jens Gayko, Sebastien Hallensleben, Stefan Weisgerber and Stefano Nativi, the EUOS had its first deliverable in the form of a draft AI Risk Landscape document which Lindsay submitted to the EC DG Joint Research Centre (JRC). A joint EUOS and DG JRC initiative will complete a 2nd draft in March for the European Commission's AI Watch and in parallel complete the Global AI Standards Landscape Assessment which is under way.
